Traditionally, collaborative robot (cobot) manufacturers have placed the burden of integration on the user. To integrate a cobot with the real world, the user must map relevant features of that world (end-effector, surfaces, locations of interest, obstacles, etc.) to mathematical representations that the cobot understands (coordinate systems, points, poses, planes, primitive shapes, etc.). This is a major stumbling block: many users do not understand these representations, entering them is time-consuming and error-prone, and once entered they do not adapt when the environment changes.
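To illustrate the kind of representation a user is typically asked to supply, the following is a minimal Python sketch that defines a work-surface plane from three manually taught points. The function names and the point values are hypothetical and chosen for illustration only; they do not correspond to any cobot vendor's API.

```python
import math

def subtract(a, b):
    """Component-wise difference a - b of two 3D points."""
    return tuple(x - y for x, y in zip(a, b))

def cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def plane_from_points(p0, p1, p2):
    """Return (unit_normal, offset) of the plane n . x = d through three points."""
    n = cross(subtract(p1, p0), subtract(p2, p0))
    length = math.sqrt(sum(c * c for c in n))
    if length < 1e-9:
        raise ValueError("points are collinear; no unique plane")
    n = tuple(c / length for c in n)
    d = sum(nc * pc for nc, pc in zip(n, p0))
    return n, d

def distance_to_plane(point, plane):
    """Signed distance from a point to the plane, positive along the normal."""
    n, d = plane
    return sum(nc * pc for nc, pc in zip(n, point)) - d

# Hypothetical table top, taught by touching three corners
# (metres, expressed in an assumed robot base frame).
table = plane_from_points((0.4, -0.3, 0.1), (0.8, -0.3, 0.1), (0.4, 0.3, 0.1))
```

Even in this small case the user has to know which frame the points are expressed in, in which order to teach them so the normal points upward, and to repeat the procedure whenever the table moves.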

Making robots understand their environment

The project’s objective is to ease the deployment of automation solutions by making the robot “understand” its environment through the combination of active 3D sensing, actionable semantic world models, and Augmented Reality (AR). The result will be a platform that simplifies the reliable deployment of typical cobot applications (e.g., pick & place, use of a conveyor belt, etc.) as well as more complex applications. The solution will reduce the time, cost, risk, and specialized knowledge required to successfully deploy a cobot. To this end, the project will investigate the active use of 3D sensing, an actionable semantic model of the cobot's environment, and novel User Interfaces (UIs) for cobot programming.

Research topics

This is an Industrial PhD project, with the University of Southern Denmark as the host university and Universal Robots as the host company.

The project consists of three research topics:

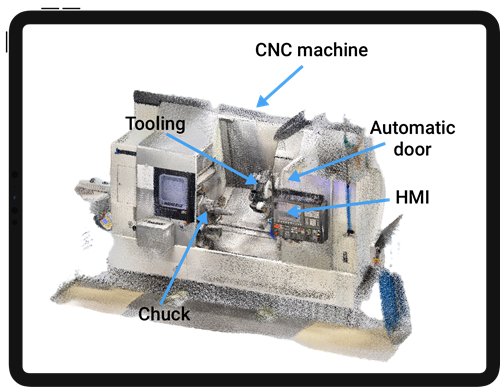

- 3D sensing - Simultaneous Localization and Mapping (SLAM) is used to obtain a detailed model of the robot’s workcell. In addition, different methods of representing this model will be evaluated.

- Actionable semantic world representation - the project will investigate how to program cobots that understand how objects “work” and how the cobot can handle them.

- Augmented Reality - the project will build on previous work, focusing on an easily approachable UI for mobile and wearable AR and on evaluating users’ mental workload.
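As a rough illustration of what an "actionable" semantic world representation could look like, the sketch below stores objects together with the actions they afford, so that a program can ask for "something to place on" instead of a hard-coded pose. This is an assumption-laden example: the class names, the affordance labels, and the coordinates are all hypothetical and are not taken from the project.

```python
from dataclasses import dataclass, field

@dataclass
class WorldObject:
    """A hypothetical entry in the world model."""
    name: str
    category: str
    position: tuple  # (x, y, z) in metres, in an assumed robot base frame
    affordances: set = field(default_factory=set)

@dataclass
class WorldModel:
    """A hypothetical container that answers affordance queries."""
    objects: dict = field(default_factory=dict)

    def add(self, obj):
        self.objects[obj.name] = obj

    def find(self, affordance):
        """Return the names of all objects offering the given affordance, sorted."""
        return sorted(o.name for o in self.objects.values()
                      if affordance in o.affordances)

# Illustrative workcell contents; all values are made up.
world = WorldModel()
world.add(WorldObject("box_1", "box", (0.5, 0.1, 0.1), {"graspable", "stackable"}))
world.add(WorldObject("conveyor", "conveyor_belt", (0.9, 0.0, 0.2), {"place_target"}))
world.add(WorldObject("table", "surface", (0.6, 0.0, 0.0), {"place_target", "obstacle"}))

place_targets = world.find("place_target")
```

In such a design the positions would be filled in by the 3D sensing component rather than typed in by the user, and the affordance labels are what make the model usable for programming at the level of tasks rather than coordinates.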

Faster and cheaper robot applications

The expected outcome of the project is a significant reduction in the deployment time and cost of typical robot automation applications. With this solution, integration costs can be partially or completely removed, increasing the Return on Investment for Small and Medium-sized Enterprises.

Project period

2022-2024

PhD Supervisors

Main supervisor: Mikkel Baun Kjærgaard

Co-supervisor: Christian Schlette